Next-Gen Alexa Multimodal Assistant

August 2025

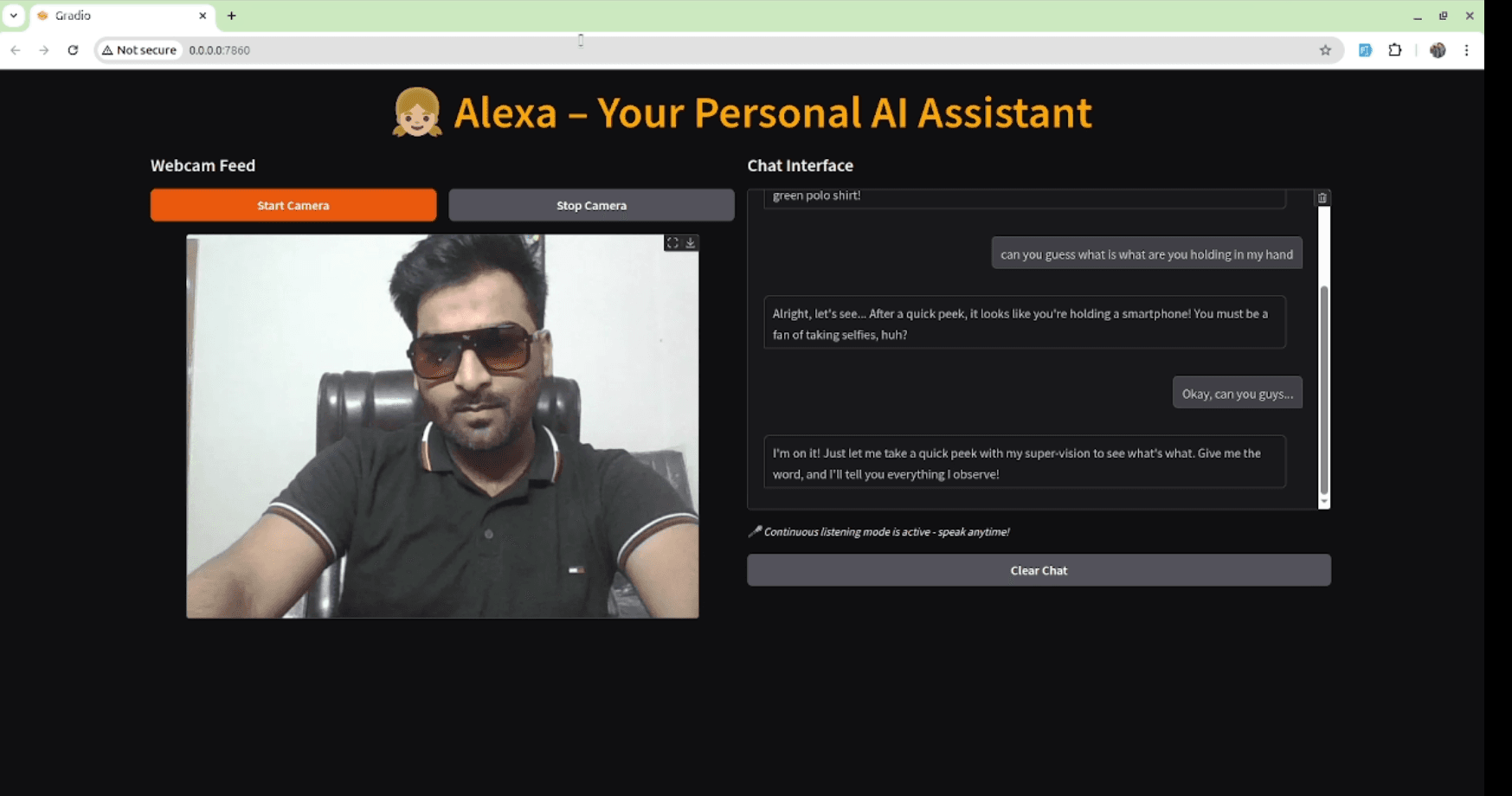

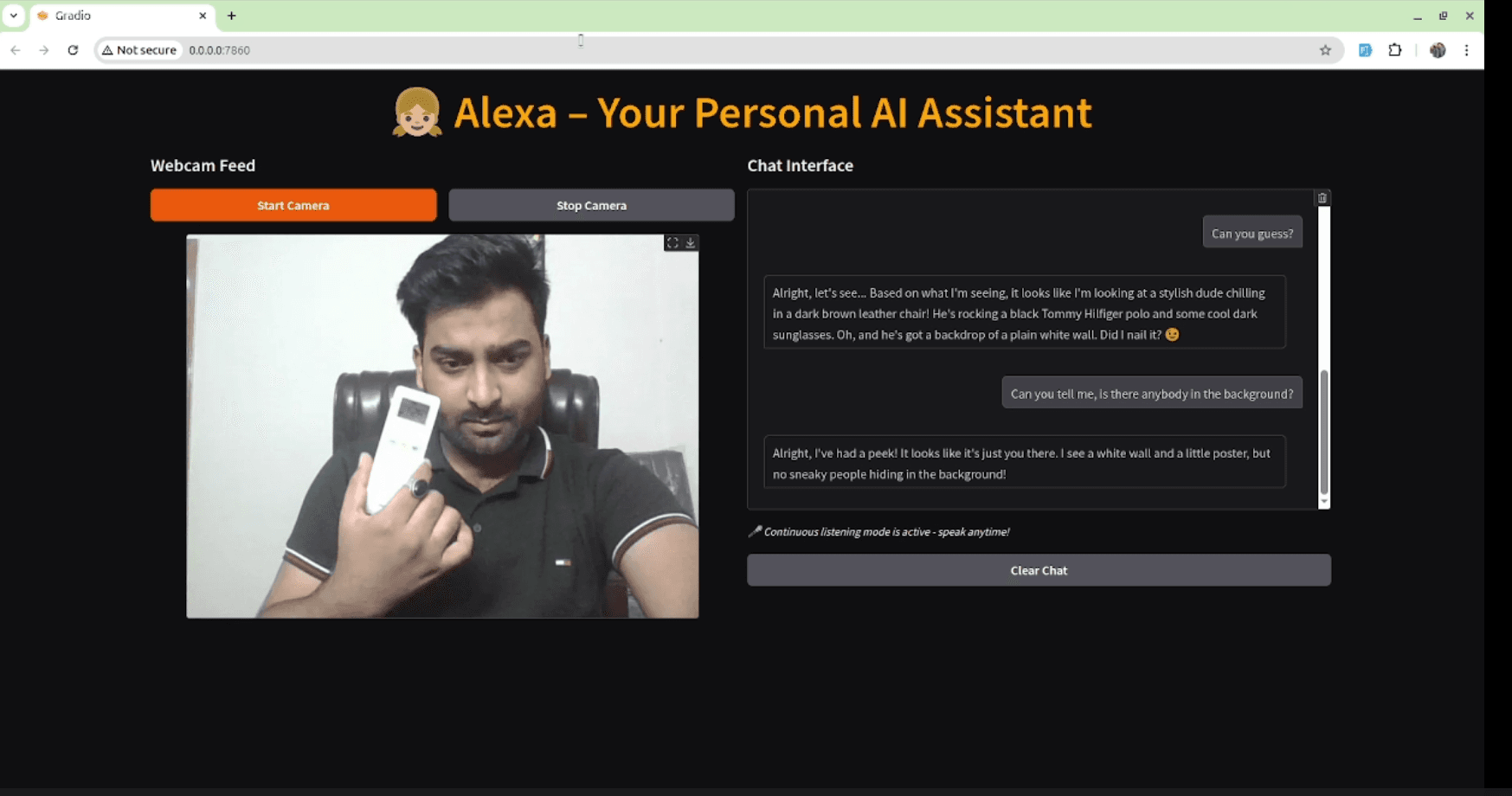

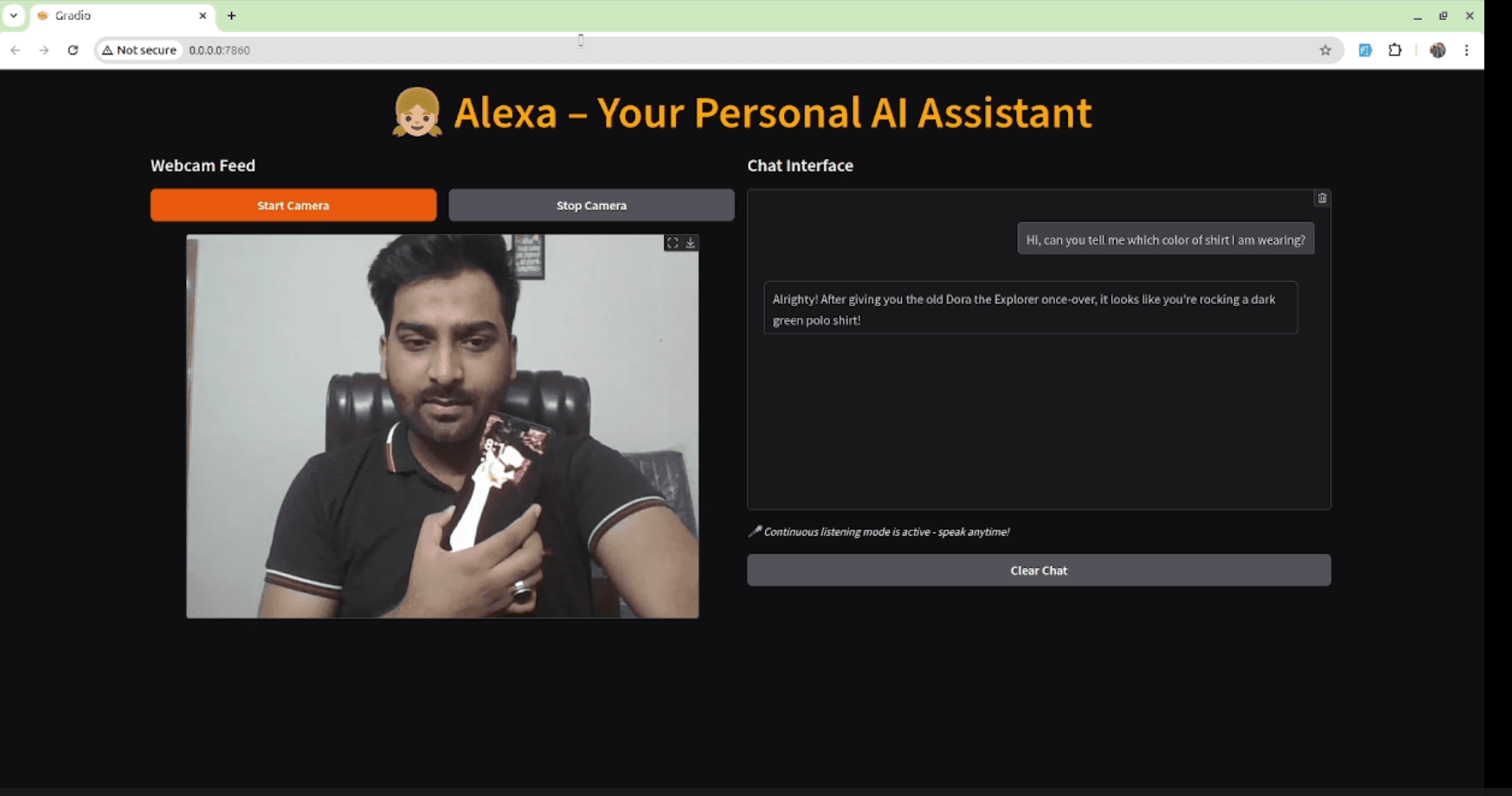

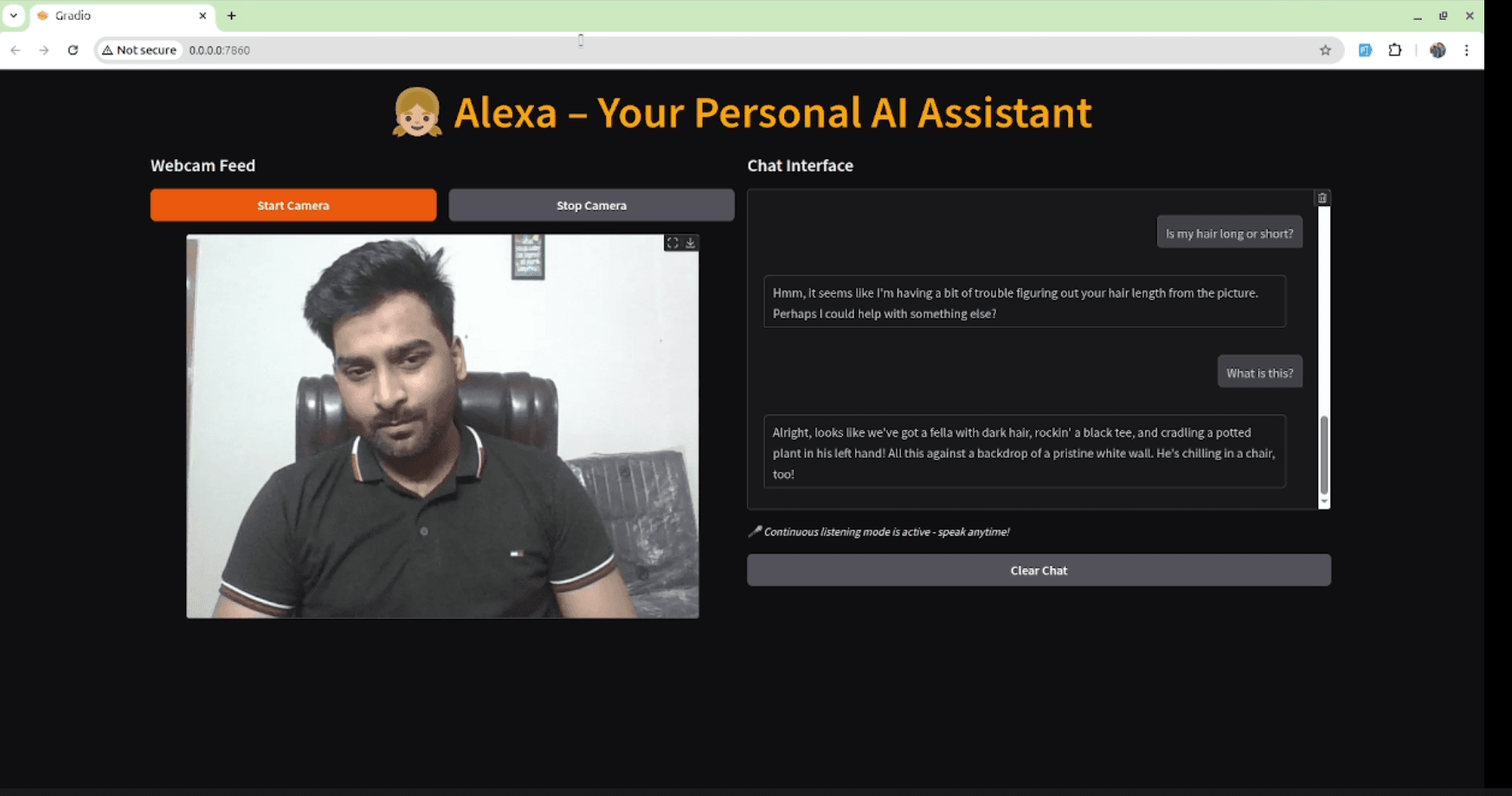

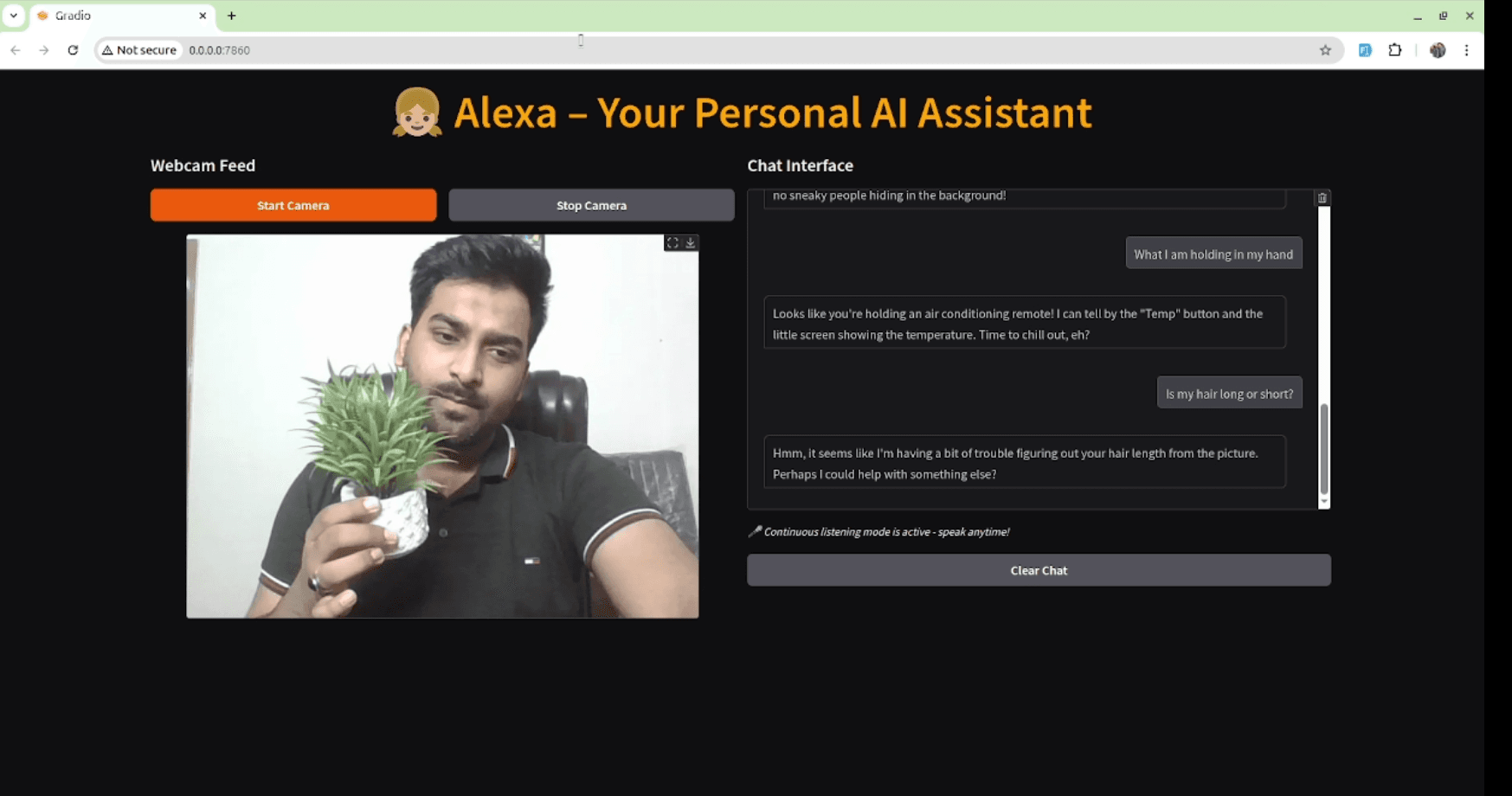

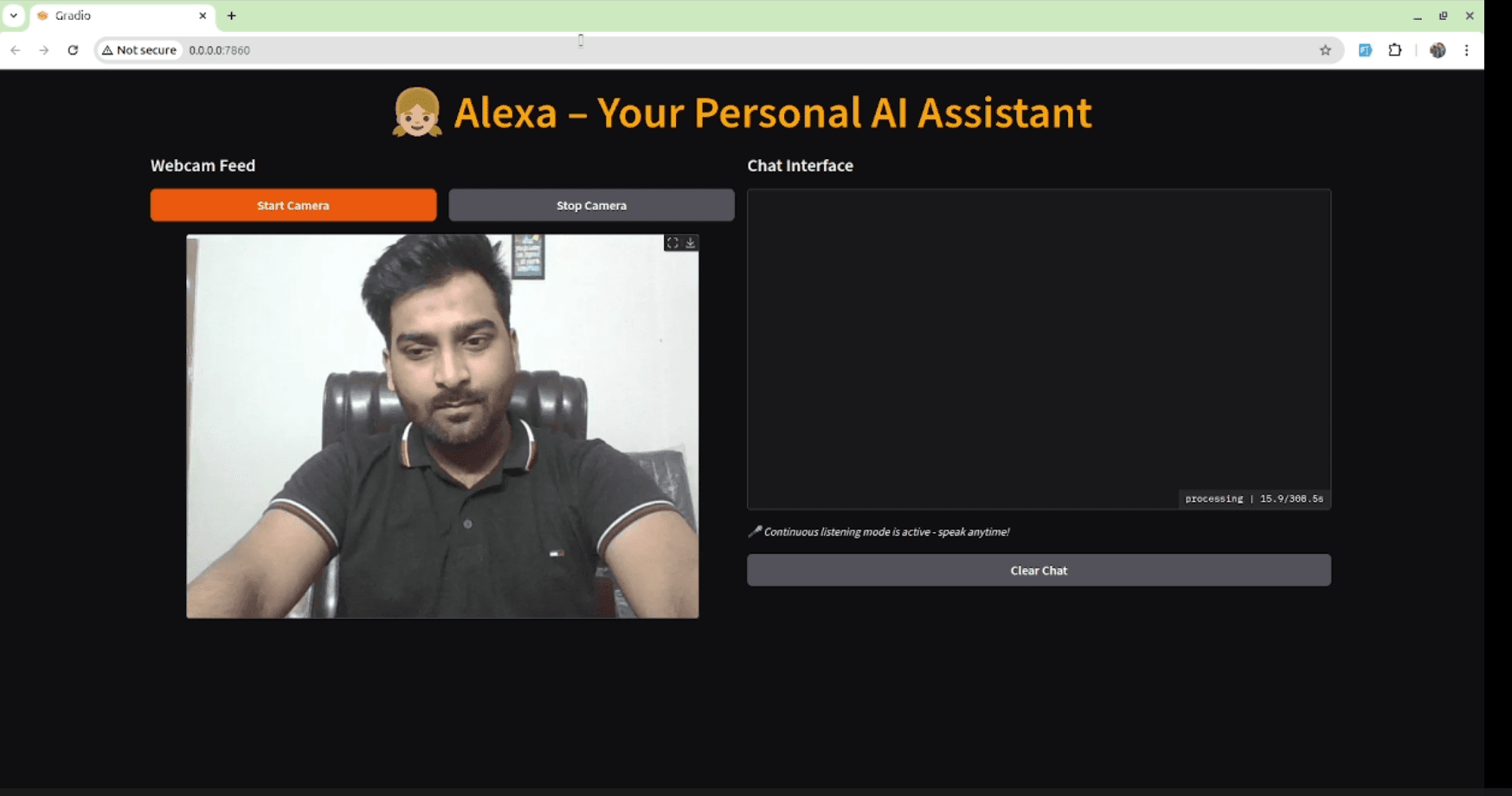

A next-generation Alexa-style AI assistant with voice, vision, and video interactions powered by multimodal AI and agentic RAG.

Project Overview

This project reimagines the voice assistant experience by combining voice, vision, and retrieval-augmented generation (RAG) into a single multimodal AI system. Unlike traditional voice assistants, it does more than respond with natural speech: it uses a webcam to see the user, recognizes gestures, expressions, and objects, and gives context-aware answers. Users can talk to it, show it things, or interact with it in a video-call style. Built with LangGraph, Groq, Google GenAI, OpenCV, SpeechRecognition, and ElevenLabs, it combines real-time multimodal awareness with conversational intelligence.
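The page does not show how these components are wired together. The sketch below is a hypothetical plain-Python illustration of a graph-style flow like the one the overview describes: a router picks a vision, retrieval, or chat branch, and a response node produces the answer. It mimics the idea of nodes and conditional edges found in LangGraph but does not use LangGraph's API. The keyword router, node functions, and `TurnState` fields are all assumptions standing in for model-driven decisions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TurnState:
    """State passed between nodes for one user turn (hypothetical fields)."""
    user_text: str
    route: str = ""
    context: List[str] = field(default_factory=list)
    reply: str = ""


# Hypothetical keyword cues standing in for an LLM-based routing decision.
VISION_CUES = ("see", "look", "wearing", "holding", "color")
RAG_CUES = ("my notes", "my calendar", "remind me", "what did i")


def route(state: TurnState) -> TurnState:
    """Conditional edge: choose which branch handles this turn."""
    text = state.user_text.lower()
    if any(cue in text for cue in VISION_CUES):
        state.route = "vision"
    elif any(cue in text for cue in RAG_CUES):
        state.route = "retrieve"
    else:
        state.route = "chat"
    return state


def vision_node(state: TurnState) -> TurnState:
    # Placeholder: a real node would capture a webcam frame and query a vision model.
    state.context.append("frame: <webcam observation>")
    return state


def retrieve_node(state: TurnState) -> TurnState:
    # Placeholder: a real node would search the user's personal knowledge sources.
    state.context.append("doc: <retrieved personal note>")
    return state


def respond_node(state: TurnState) -> TurnState:
    # Placeholder: a real node would call the LLM with the user text and gathered context.
    state.reply = f"[{state.route}] answering with {len(state.context)} context item(s)"
    return state


# Branch nodes keyed by route name; "chat" adds no extra context.
NODES: Dict[str, Callable[[TurnState], TurnState]] = {
    "vision": vision_node,
    "retrieve": retrieve_node,
    "chat": lambda s: s,
}


def run_turn(user_text: str) -> TurnState:
    """Run one turn: route, run the chosen branch, then respond."""
    state = route(TurnState(user_text))
    state = NODES[state.route](state)
    return respond_node(state)
```

For example, `run_turn("What color shirt am I wearing?").reply` returns `"[vision] answering with 1 context item(s)"`. Keeping the routing decision separate from the branch nodes means the keyword check could be swapped for a model call without touching the rest of the flow.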

Key Features

Voice Interaction

Talk to the assistant and receive natural, human-like speech responses.

Vision Awareness

Uses the webcam to recognize shirt colors, gestures, objects, and facial expressions.
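The page does not describe how shirt colors are recognized. One simple approach, sketched here under assumptions, is to average the pixels of a torso region and pick the nearest entry in a small named palette. The palette values and function names are hypothetical. Pixels here are plain RGB tuples, whereas OpenCV frames are NumPy arrays in BGR order by default and would need cropping and channel conversion first.

```python
from math import dist
from typing import Iterable, Tuple

RGB = Tuple[int, int, int]

# Hypothetical reference palette; a real system might tune these or work in HSV space.
PALETTE = {
    "red": (200, 30, 30),
    "green": (30, 160, 60),
    "blue": (30, 60, 200),
    "black": (20, 20, 20),
    "white": (235, 235, 235),
    "yellow": (230, 210, 40),
}


def mean_rgb(pixels: Iterable[RGB]) -> RGB:
    """Integer mean of each channel over the region."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("empty region")
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) // n for i in range(3))


def name_color(pixels: Iterable[RGB]) -> str:
    """Return the palette name closest (Euclidean distance) to the region's mean color."""
    avg = mean_rgb(pixels)
    return min(PALETTE, key=lambda name: dist(avg, PALETTE[name]))
```

For example, a torso crop of `[(180, 40, 35), (210, 25, 20), (195, 35, 40)]` averages to `(195, 33, 31)` and is labeled `"red"`. Averaging is sensitive to shadows and patterned clothing, so a dominant-color method such as clustering would likely be more robust.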

Agentic RAG

Retrieves knowledge from personal sources for context-aware answers.
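The retrieval step is not detailed here. The sketch below swaps in bag-of-words cosine similarity over a list of personal notes so that it runs on the standard library alone; a real implementation would more likely use embeddings and a vector store. The `min_score` cutoff is a hypothetical stand-in for the agentic decision of whether retrieved context is worth adding: when no note clears it, the assistant answers without retrieval.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> Counter:
    """Lowercased alphanumeric term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2, min_score: float = 0.2) -> List[Tuple[float, str]]:
    """Return up to k (score, doc) pairs, best first, dropping any below min_score."""
    q = tokenize(query)
    scored = sorted(((cosine(q, tokenize(d)), d) for d in docs), reverse=True)
    return [(s, d) for s, d in scored[:k] if s >= min_score]
```

With notes such as `"Dentist appointment on Friday at 10am"`, the query `"When is my dentist appointment?"` retrieves that note, while `"Tell me a joke"` shares no terms with any note and returns an empty list, signaling that retrieval should be skipped.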

AI in Video Form

Interact with the assistant in a video-call style interface.

Challenges

- Integrating multimodal streams (voice, video, RAG) in real time

- Ensuring smooth webcam and microphone handling across platforms

- Maintaining conversational memory with context-aware retrieval
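One common way to approach the conversational-memory challenge, shown here as a hypothetical sketch rather than the project's actual design, is to keep a bounded window of recent turns and prepend retrieved snippets when building the next prompt. `ConversationMemory`, `max_turns`, and the prompt layout are assumptions.

```python
from collections import deque
from typing import Deque, List, Tuple


class ConversationMemory:
    """Keeps only the last `max_turns` exchanges (hypothetical design)."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def build_prompt(self, user: str, retrieved: List[str]) -> str:
        """Retrieved context first, then recent turns, then the new user message."""
        lines = [f"Context: {doc}" for doc in retrieved]
        for u, a in self.turns:
            lines.append(f"User: {u}")
            lines.append(f"Assistant: {a}")
        lines.append(f"User: {user}")
        return "\n".join(lines)
```

A fixed window keeps prompt size predictable for real-time responses; older facts that still matter would have to come back through retrieval rather than raw history.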

Key Learnings

- Built an end-to-end multimodal pipeline combining vision, audio, and RAG

- Explored LangGraph for agentic AI flows

- Improved real-time human-AI interaction design through a video-call style presence

Project Gallery

Related Projects

You might also be interested in these projects.

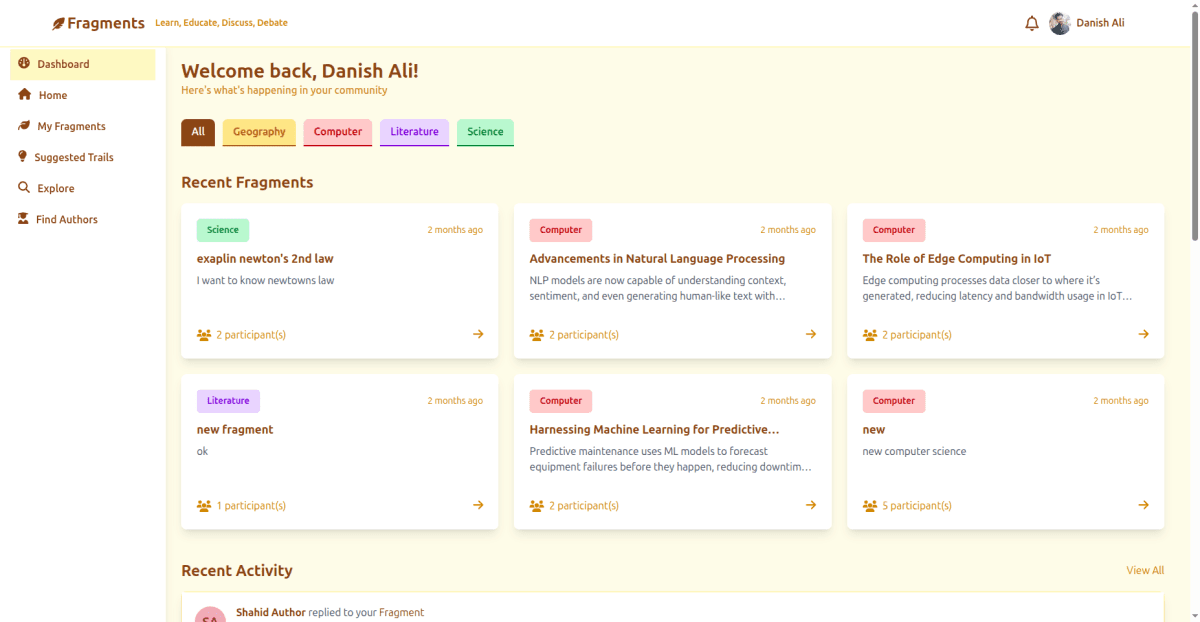

Fragments Trails – Educational Q&A Platform

July 2025

A full-featured educational Q&A platform with AI moderation, personalized feeds, Trails, and admin/moderator control.

Scalable E-Commerce Platform

December 2024

A scalable full-stack e-commerce platform built with the MERN stack, TypeScript, Docker, Redis, Stripe, Firebase, Cloudinary, and more.

Agentic AI Researcher App

September 2025

An AI-powered research assistant that searches arXiv, reads papers, generates insights, and writes new research in LaTeX PDF format.